Summary

Disclaimer: This summary has been generated by AI. It is experimental, and feedback is welcomed. Please reach out to info@qconsf.com with any comments or concerns.

The presentation titled 'Designing AI Platforms for Reliability: Tools for Certainty, Agents for Discovery' given by Aaron Erickson discusses the integration of deterministic and probabilistic systems in AI platforms to enhance both reliability and adaptability.

Key Points:

- Dual Approach: The talk emphasizes the need for platforms that combine deterministic tools, which provide certainty needed for transactions and security, with probabilistic agents that enable adaptability and discovery in complex situations.

- Real-World Applications: Examples are provided from areas like anomaly detection and health diagnostics, illustrating how combining these approaches leads to more capable and trustworthy platforms.

- Agentic Systems: The presentation highlights the use of agentic systems and AI agents to govern GPU resources at global scale, showcasing NVIDIA's Llo11yPop project.

- Balance of Exploration and Precision: Erickson argues against purely stochastic approaches and promotes a balanced method where exploratory agents are grounded by frequent truth checks.

Challenges and Solutions:

- AI Implementation: The speaker provides insight into challenges companies face in AI deployment and shares strategies to overcome them, such as using purpose-built retrieval and analyst agents.

- System Design Considerations: Emphasizes careful orchestration of agents to solve specific problems while providing reliable outcomes.

The talk underscores the importance of designing AI platforms that integrate both deterministic and stochastic methods for enhanced reliability and discovery, applying these principles with real-world examples and practical strategies for implementation.

This is the end of the AI-generated content.

Abstract

Modern AI platforms don’t have to choose between deterministic precision and probabilistic exploration—they need both. Deterministic tools provide the certainty required for high-stakes operations like transactions, security, and compliance, while probabilistic agents bring adaptability and discovery to complex, evolving problems. In this talk, we’ll explore how to design platforms that combine these modes effectively: long-running agents grounded by frequent truth checks, tools that guarantee reliable outcomes where variability is unacceptable, and hybrid systems that thrive in uncertainty when the right tool for the job is probabilistic reasoning. Using real-world examples—from detecting anomalous clusters to health agents debating diagnostic hypotheses—we’ll show how this dual-layer approach leads to platforms that are not only more capable, but also more trustworthy.

Interview:

What is your session about, and why is it important for senior software developers?

My session is about how to weave together traditional software and platforms with new forms of agentic software, which are usually stochastic. This is often framed as an either/or choice, a framing we should reject in favor of “right tool for the job”.

Why is it critical for software leaders to focus on this topic right now, as we head into 2026?

This matters now because nearly every company is working to deploy AI, yet many struggle to productionize it, often because of mismatched expectations about what AI does well and what it does less well with current tools. Getting this right is critical.

What are the common challenges developers and architects face in this area?

The common challenges are knowing when to apply a stochastic, AI-driven approach, understanding the patterns in agent development (and how they differ), and interleaving the two so that the whole is more than the sum of its parts. How, for example, do you build a grounded deep research feature into a product in a manner that doesn’t run off into incoherent reasoning chains?
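
One way to picture the grounding question above is a bounded loop in which every step an agent proposes must pass a deterministic check before it is kept. The sketch below is illustrative only and is not taken from the talk: `grounded_research`, `propose`, `verify`, and the sample facts are all hypothetical names, and a scripted proposer stands in for a stochastic model.

```python
from typing import Callable, List, Optional

def grounded_research(
    propose: Callable[[List[str]], Optional[str]],  # stochastic agent (hypothetical)
    verify: Callable[[str], bool],                  # deterministic truth check (hypothetical)
    max_steps: int = 5,
) -> List[str]:
    """Keep only claims that pass the truth check; stop after max_steps."""
    accepted: List[str] = []
    for _ in range(max_steps):
        claim = propose(accepted)
        if claim is None:       # the agent has nothing more to propose
            break
        if verify(claim):       # unverified claims never enter the chain
            accepted.append(claim)
    return accepted

# Assumed data for illustration: a set of known facts acts as the truth source.
FACTS = {"gpu-7 temperature exceeded 90C", "gpu-7 is in cluster-a"}

# A scripted proposer stands in for a model so the example is repeatable.
script = iter([
    "gpu-7 temperature exceeded 90C",
    "gpu-7 was hit by lightning",   # not in FACTS, so it is rejected
    "gpu-7 is in cluster-a",
])
result = grounded_research(lambda accepted: next(script, None), FACTS.__contains__)
print(result)
```

The step limit and the per-step check are the two assumptions doing the work here: the first bounds how long a chain can run, and the second keeps an unverified claim from becoming the basis for later steps.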

What's one thing you hope attendees will implement immediately after your talk?

One immediate implication is that we should be building tool catalogs for AI agents to use. This goes beyond a mere “here are all your MCP endpoints” listing; it is about giving agents the context to select the right tool for the problem in the first place.
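
As a rough illustration of what a tool catalog with selection context might look like, the sketch below attaches a description, "use when" keywords, and a deterministic/probabilistic flag to each tool. Everything in it is an assumption made for illustration (the `ToolEntry` fields, the tool names, and the keyword-overlap scoring); a real catalog would likely hand the descriptions to the agent itself rather than match keywords.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass(frozen=True)
class ToolEntry:
    name: str
    description: str            # what the tool does, written for the agent
    use_when: Tuple[str, ...]   # hypothetical keywords hinting at suitable tasks
    deterministic: bool         # True for exact tools, False for exploratory agents
    run: Callable[[str], str]

def select_tool(catalog: List[ToolEntry], task: str) -> Optional[ToolEntry]:
    """Pick the entry whose keywords overlap most with the task, or None."""
    words = set(task.lower().split())

    def score(entry: ToolEntry) -> int:
        return len(words & set(entry.use_when))

    best = max(catalog, key=score)
    return best if score(best) > 0 else None

# Hypothetical catalog entries; the lambdas are placeholders for real tools.
CATALOG = [
    ToolEntry(
        name="ledger_lookup",
        description="Exact lookup of account balances and transactions.",
        use_when=("balance", "transaction", "invoice"),
        deterministic=True,
        run=lambda q: "ledger result for: " + q,
    ),
    ToolEntry(
        name="anomaly_explorer",
        description="Exploratory agent that investigates unusual clusters.",
        use_when=("anomaly", "cluster", "unusual"),
        deterministic=False,
        run=lambda q: "hypotheses for: " + q,
    ),
]

chosen = select_tool(CATALOG, "find the unusual cluster in region A")
print(chosen.name if chosen else "no suitable tool")
```

Returning `None` when nothing matches is a deliberate choice in this sketch: it lets the caller escalate or ask for clarification instead of forcing a poor tool onto the task.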

What makes QCon stand out as a conference for senior software professionals?

What makes QCon stand out is that the presenters are builders, not marketers, not trying to drive hype.

Speaker

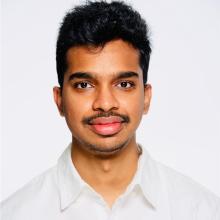

Aaron Erickson

Senior Manager and Founder of the DGX Cloud Applied AI Lab @NVIDIA, Previously Engineer @ThoughtWorks, VP of Engineering @New Relic, CEO and Co-Founder @Orgspace

Aaron Erickson founded the Applied AI Lab for DGX Cloud at NVIDIA, which specializes in building foundation models and agentic systems to solve broad industry problems like time series-based anomaly detection. Previously, he held engineering leadership roles at ThoughtWorks and New Relic before founding Orgspace, a startup that pioneered generative AI–driven organizational design. He is the author of The Nomadic Developer and Professional F# 2.0, and most recently launched NVIDIA’s Llo11yPop project, applying AI agents to govern GPU resources at global scale.